🚀 Next-Generation AI Model Achievements

Breakthrough performance metrics and capabilities that set new industry standards

🎯 Record-Low Hallucination Rate

A hallucination rate of about 4%, down from 12% in the previous version, Grok 4 Fast. That is roughly a 67% relative reduction in hallucinations, which makes the model better suited to tasks where factual accuracy is critical.

🏆 #1 Ranked on LMSYS Leaderboard

Secured the top position with an impressive 64.78% user preference rate in blind comparisons against competing models. Real users consistently choose this model’s responses for their quality, accuracy, and helpfulness.

🐦 Real-Time X Integration

Seamless connection to X (formerly Twitter) provides unmatched current event analysis and social intelligence. Stay informed with real-time data processing and trending topic insights that other models simply cannot match.

❤️ Superior Emotional Intelligence

Demonstrating a 100+ Elo point improvement in natural conversation flow metrics. The model better understands nuance, sentiment, and contextual cues, creating more human-like and empathetic interactions.

⚡ Blazing Fast Inference Speed

Optimized architecture delivers lightning-quick response times, making it ideal for real-time applications. Experience minimal latency even with complex queries, enabling seamless integration into time-sensitive systems.

📚 Massive Context Window

Process and reason across an enormous 2 million token context window, enabling complex document analysis and long-form reasoning. Analyze entire books, research papers, or conversation histories without losing important context.

What Makes Grok 4.1 Fast Different From Other AI Models

On November 19, 2025, xAI released two powerful updates that are shifting how developers think about building intelligent systems. The company introduced Grok 4.1 Fast, a tool-calling model designed for real-world enterprise tasks like customer support and financial analysis, alongside the Agent Tools API, which gives the model access to live X data, web search, secure code execution, and more.

This isn’t just another incremental update. Grok 4.1 Fast comes with a 2 million token context window, meaning it can process massive amounts of information—think entire codebases, long legal contracts, or comprehensive research reports—in one go. That’s roughly equivalent to reading a 1,500-page book and still remembering every detail.

What sets this model apart is how it handles tools. While many AI models can call external functions, Grok 4.1 Fast excels at choosing the right tool at the right time, executing multiple tools in parallel across several turns, and maintaining accuracy even as context grows. On the Berkeley Function Calling v4 Benchmark, it outperforms Gemini 3 Pro and competes closely with Claude Sonnet 4.5 and GPT-5, all while maintaining cost efficiency.

The Agent Tools API runs entirely on xAI’s infrastructure, so you don’t need to manage separate API keys, rate limits, sandboxes, or retrieval pipelines for each tool. Grok decides when and how to use search, code execution, document retrieval, or external MCP servers. For developers, that means less setup time and more focus on building features that matter.

Understanding the 2 Million Token Context Window

Context window size determines how much information an AI model can “see” at once. Most models today offer between 128,000 and 256,000 tokens. Grok 4.1 Fast’s 2 million token window is roughly 8 to 16 times larger than that range.

Think of it like this: imagine trying to answer questions about a textbook while only being able to see one page at a time versus having the entire book open in front of you. The bigger window lets Grok process entire product manuals, multi-year financial reports, or sprawling codebases without breaking them into smaller chunks.

This matters because breaking documents into pieces can cause the model to miss connections between sections. With 2 million tokens, Grok can read a full legal contract, understand how clauses relate to each other, and answer targeted questions about edge cases—all in a single request.

For developers building retrieval-augmented generation systems or search applications, this simplifies architecture. You can pass more context directly instead of building complex chunking and embedding pipelines. Fewer round trips, less infrastructure, faster answers.
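As a rough illustration of the "pass it whole or chunk it" decision, the sketch below estimates whether a set of documents fits in a single request. The 4-characters-per-token ratio is a common rule of thumb for English text, not xAI’s tokenizer, and the names `estimate_tokens`, `fits_in_context`, and the reserved output budget are assumptions made for this example.

```python
CONTEXT_WINDOW_TOKENS = 2_000_000  # window size quoted in this article
CHARS_PER_TOKEN = 4                # rough heuristic for English text (assumption)


def estimate_tokens(text: str) -> int:
    """Approximate the token count from character length (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(documents: list[str], reserved_for_output: int = 8_000) -> bool:
    """Return True if all documents plus an output budget fit in one request."""
    total = sum(estimate_tokens(doc) for doc in documents)
    return total + reserved_for_output <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    contract = "clause " * 100_000  # about 700,000 characters of filler text
    print(fits_in_context([contract]))
```

For real workloads, the provider’s own token counts should replace the heuristic, since token-to-character ratios vary widely across languages and code.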

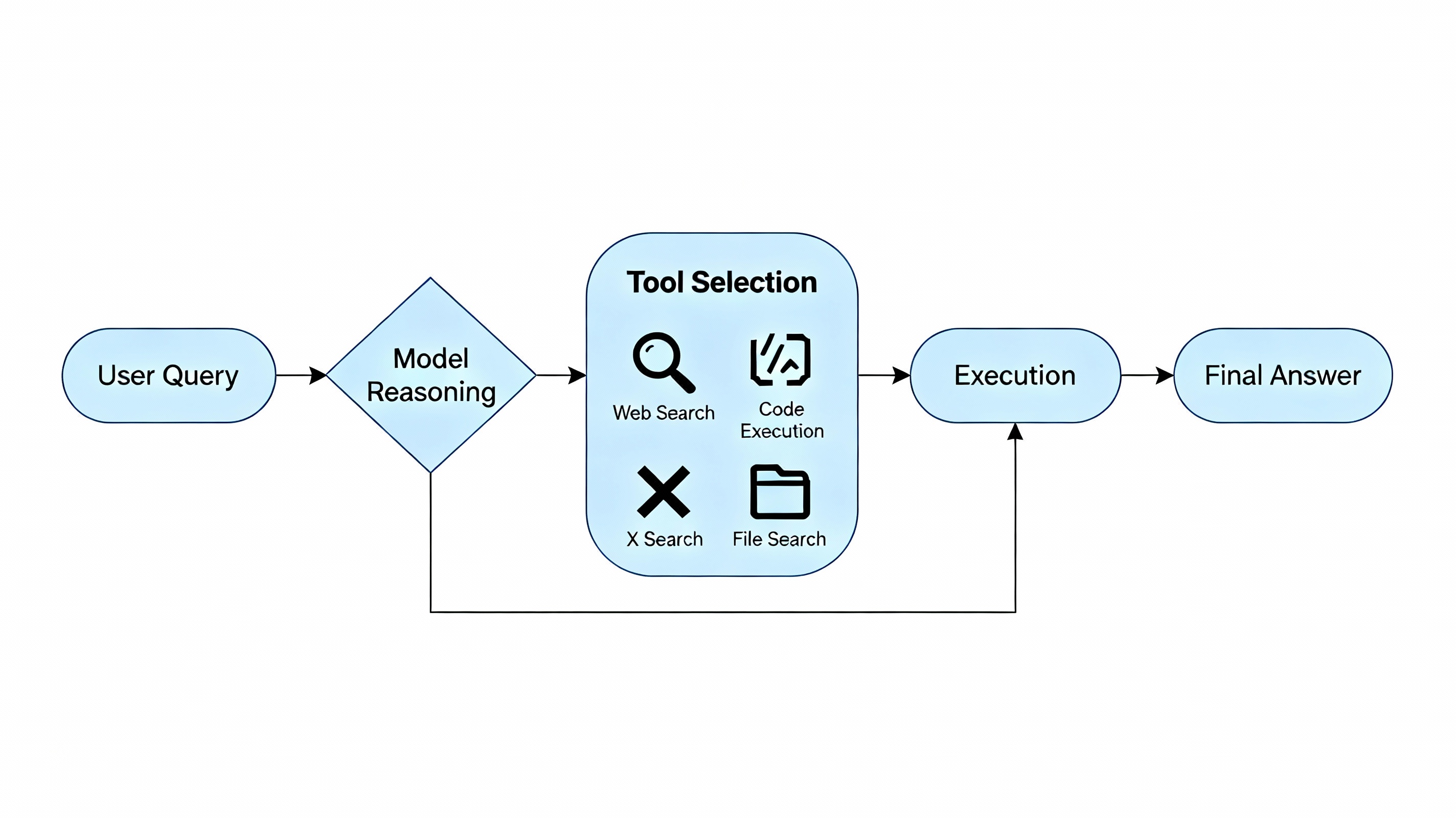

How the Agent Tools API Works

The Agent Tools API is a suite of server-side tools that allow Grok 4.1 Fast to operate as a fully autonomous agent. Here’s what it includes:

Search Tools give the model access to real-time X posts and web search results. When someone asks about breaking news, market sentiment, or trending topics, Grok can pull live data and deliver current insights.

Files Search lets you upload documents and retrieve relevant sections based on meaning and context. The model searches your uploaded files intelligently, returns relevant passages, and includes citations so you can verify sources.

Code Execution runs Python code in a secure sandbox environment. This is useful for data analysis, running simulations, generating charts, or transforming datasets. Grok writes the code, executes it safely, and returns the results—all without you needing to set up compute infrastructure.

MCP Tools connect to external Model Context Protocol servers, allowing access to custom third-party tools. This opens the door to integrating proprietary databases, internal APIs, or specialized software systems directly into the workflow.

All of this happens on xAI’s servers. You make one API call, and Grok handles the rest—deciding which tools to use, executing them in sequence or parallel, and synthesizing the results into a final answer.
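To make "in sequence or parallel" concrete, here is a small conceptual sketch. It is not xAI’s server-side orchestration, which is not described in this article; the two `fake_*` tools and the function names are stand-ins invented for illustration. Independent lookups run concurrently, and a final step that depends on all of them runs afterwards.

```python
from concurrent.futures import ThreadPoolExecutor


# Stand-in tools; real ones would call a search index, a sandbox, and so on.
def fake_web_search(query: str) -> str:
    return f"web results for {query!r}"


def fake_x_search(query: str) -> str:
    return f"X posts about {query!r}"


def run_in_parallel(query: str) -> list[str]:
    """Run independent tools concurrently; results keep submission order."""
    tools = [fake_web_search, fake_x_search]
    with ThreadPoolExecutor(max_workers=len(tools)) as pool:
        futures = [pool.submit(tool, query) for tool in tools]
        return [future.result() for future in futures]


def synthesize(results: list[str]) -> str:
    """Sequential step that needs every parallel result before it can run."""
    return " | ".join(results)


if __name__ == "__main__":
    print(synthesize(run_in_parallel("grok")))
```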

Tool Calling Performance: Grok 4.1 Fast vs Competitors

Tool calling is the ability of an AI model to use external functions or APIs to complete tasks. It’s what allows a model to search the web, query databases, execute code, or fetch real-time data instead of relying solely on pre-trained knowledge.
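The mechanics can be sketched in a few lines. In the hypothetical setup below, the model emits a JSON object naming a tool and its arguments, and the application looks the tool up in a registry and runs it. The JSON shape, the `get_order_status` tool, and the order data are all made up for illustration and do not reflect any specific vendor’s wire format.

```python
import json

# Made-up order data standing in for a real database.
_ORDERS = {"A100": "shipped", "A101": "processing"}


def get_order_status(order_id: str) -> str:
    """Hypothetical tool: look up the status of an order."""
    return _ORDERS.get(order_id, "unknown")


# Registry mapping tool names the model may request to Python callables.
TOOLS = {"get_order_status": get_order_status}


def dispatch(tool_call_json: str) -> str:
    """Parse a model-issued tool call and run the matching function."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call["arguments"])


if __name__ == "__main__":
    request = '{"name": "get_order_status", "arguments": {"order_id": "A100"}}'
    print(dispatch(request))
```

The result of `dispatch` would normally be sent back to the model so it can use the fresh data in its final answer.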

Grok 4.1 Fast was specifically trained for this. Using reinforcement learning in simulated environments, the model practiced using tools across dozens of domains. This training gives it exceptional performance on benchmarks that measure real-world agentic behavior.

On the τ²-bench Telecom benchmark, which evaluates tool use in customer support scenarios, Grok 4.1 Fast scored highest among all models tested, including Gemini 3 Pro, GPT-5.1, Claude Sonnet 4.5, and even the larger Grok 4 model. It completed tasks accurately while keeping total costs lower than competitors.

On the Berkeley Function Calling v4 Benchmark, which tests overall tool-calling accuracy across various scenarios, Grok 4.1 Fast achieved 72% overall accuracy. That puts it ahead of Gemini 3 Pro and competitive with Claude Sonnet 4.5 and GPT-5, while costing significantly less to run at scale.

What’s particularly impressive is how Grok 4.1 Fast handles long conversations. Many models lose accuracy as context grows, but Grok maintains performance across its full 2 million token window. On multi-turn long-context benchmarks, it scored 67%, compared to 52.5% for Grok 4 Fast and 22% for Grok 4.

This means the model can carry out complex, multi-step tasks that require dozens of tool calls, maintain coherence across long conversations, and still deliver accurate results—even when the context is packed with information.

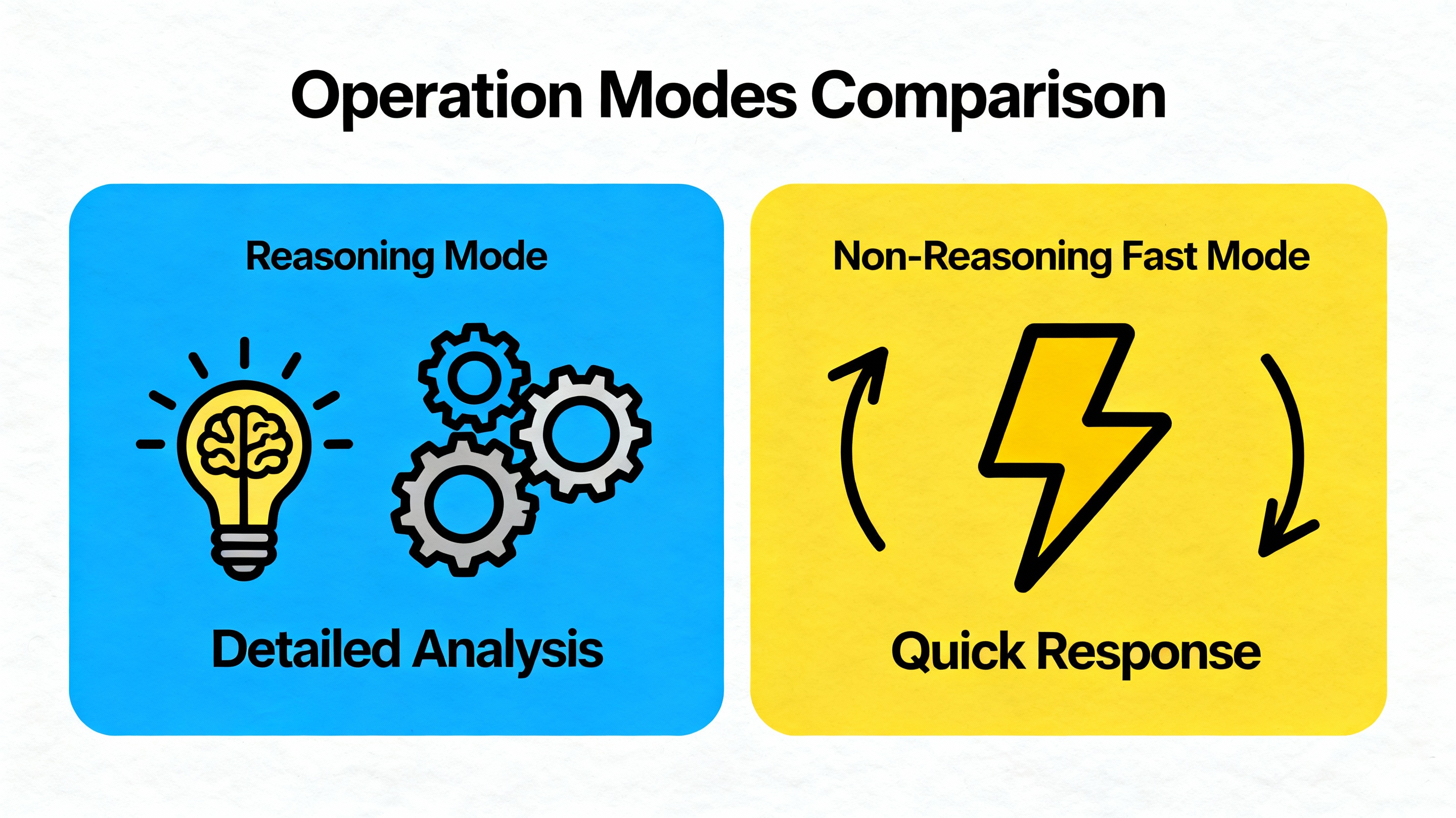

Two Modes: Reasoning and Non-Reasoning

Grok 4.1 Fast comes in two variants, each optimized for different use cases.

grok-4-1-fast-reasoning is designed for maximal intelligence. It uses a chain-of-thought reasoning process, working through problems step-by-step before delivering a final answer. This mode is slower but more deliberate, making it ideal for complex analysis, planning, multi-step workflows, and situations where accuracy is more important than speed.

grok-4-1-fast-non-reasoning is built for instant responses. It skips the internal reasoning step and answers immediately, making it perfect for conversational chat, lightweight tasks, high-volume customer interactions, and scenarios where speed matters more than depth.

Both versions share the same 2 million token context window and tool-calling capabilities. The difference is in how they process information. Reasoning mode thinks before it answers. Non-reasoning mode responds right away.

Choosing the right mode depends on your use case. If you’re building a support bot that needs to respond to simple questions quickly, non-reasoning mode keeps latency low. If you’re analyzing financial documents or debugging code, reasoning mode gives you more reliable, thoughtful results.
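One way to encode this choice in an application is a simple lookup from task type to model name. The sketch below uses the two model identifiers given above; the task categories and the decision to fall back to the reasoning variant for unknown tasks are assumptions for illustration, not guidance from xAI.

```python
REASONING_MODEL = "grok-4-1-fast-reasoning"
NON_REASONING_MODEL = "grok-4-1-fast-non-reasoning"

# Hypothetical task categories, grouped by the trade-off described in the article.
MODEL_BY_TASK = {
    "customer_chat": NON_REASONING_MODEL,       # speed matters more than depth
    "simple_faq": NON_REASONING_MODEL,
    "financial_analysis": REASONING_MODEL,      # accuracy matters more than speed
    "code_debugging": REASONING_MODEL,
    "multi_step_planning": REASONING_MODEL,
}


def choose_model(task: str) -> str:
    """Pick a model variant; unknown tasks default to the reasoning variant."""
    return MODEL_BY_TASK.get(task, REASONING_MODEL)


if __name__ == "__main__":
    print(choose_model("simple_faq"))
    print(choose_model("financial_analysis"))
```

Defaulting to the slower variant favors accuracy over latency; a high-volume chat product might reasonably choose the opposite default.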

Real-World Use Cases for Grok 4.1 Fast

Grok 4.1 Fast shines in scenarios where you need a model to think, search, execute code, and deliver answers autonomously. Here are some practical examples:

Customer Support Automation: Imagine a hotel booking system. A guest emails asking to upgrade their room. Grok can identify the guest by email, pull their booking details from your database, search for available rooms, and modify the reservation—all without human intervention. The Agent Tools API handles the entire workflow, from tool selection to execution.

Financial Analysis: An analyst uploads quarterly earnings reports from multiple companies. Grok reads all of them, compares revenue trends, calculates growth rates, runs Python code to generate charts, and delivers a written summary with citations. The 2 million token window means it can process dozens of reports simultaneously without splitting them up.

Code Review and Debugging: A developer passes an entire codebase to Grok. The model analyzes the architecture, identifies bottlenecks, suggests optimizations, and even writes test cases. Because it can see the full codebase at once, it understands how different modules interact—something smaller context windows struggle with.

Real-Time Market Monitoring: A marketing team tracks brand sentiment on X. Grok searches recent posts, analyzes sentiment, identifies trending topics, and generates a report. Because it has native access to X data, it delivers insights faster than systems that rely on external APIs.

Research and Document Synthesis: A researcher uploads academic papers, government reports, and white papers. Grok searches across all documents, pulls relevant sections, synthesizes findings, and provides citations. The Files Search tool makes this seamless, and the large context window ensures nothing gets missed.

How Hallucination Reduction Improves Reliability

One of the biggest challenges with AI models is hallucination—when the model generates plausible-sounding information that isn’t true. This is especially problematic in high-stakes fields like finance, legal, healthcare, and research.

xAI focused heavily on reducing hallucinations during Grok 4.1 Fast’s training. They used real-world user queries from production traffic and tested the model on the FActScore benchmark, which consists of 500 biography-based questions designed to measure factual accuracy.

The results are significant. Grok 4 Fast had a hallucination rate of 12%; Grok 4.1 Fast cuts that to 4%, a threefold reduction. On the FActScore benchmark, the error rate fell from 9.89% to 2.97%, a relative drop of about 70%.
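The relative size of these improvements can be checked with a short calculation. The helper name `relative_reduction` is introduced here for illustration; the input figures are the ones quoted in this article.

```python
def relative_reduction(before: float, after: float) -> float:
    """Fraction by which `after` is lower than `before`."""
    return (before - after) / before


if __name__ == "__main__":
    # Hallucination rate: 12% -> 4%
    print(f"{relative_reduction(12.0, 4.0):.1%}")
    # FActScore error rate: 9.89% -> 2.97%
    print(f"{relative_reduction(9.89, 2.97):.1%}")
```

Both figures are relative reductions in error rate, which is a different quantity from the absolute drop in percentage points (8 points and 6.92 points respectively).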

This makes Grok 4.1 Fast more dependable for information-seeking tasks. When it doesn’t know something, it’s more likely to search for the answer using the Agent Tools API rather than making something up. The model also proactively triggers web search when confidence falls below internal thresholds, further anchoring responses in verifiable sources.

For businesses, this means fewer errors in customer-facing applications, more reliable research outputs, and less time spent verifying AI-generated content.

Benchmarks That Matter: Where Grok 4.1 Fast Excels

Benchmarks are standardized tests that measure how well AI models perform on specific tasks. Here’s how Grok 4.1 Fast stacks up:

τ²-bench Telecom measures agentic tool use in real-world customer support scenarios. Grok 4.1 Fast scored 100%, outperforming Grok 4, Gemini 3 Pro, GPT-5.1, Claude Sonnet 4.5, and Grok 4 Fast. Total cost for completing the benchmark was $105 (approximately ₹8,800), making it both accurate and cost-efficient.

Berkeley Function Calling v4 evaluates overall tool-calling accuracy. Grok 4.1 Fast achieved 72% overall accuracy, placing it among the top performers. For comparison, Claude Sonnet 4.5 and GPT-5 scored similarly, while Gemini 3 Pro’s estimated score was lower. Total cost for the benchmark was $400 (approximately ₹33,400).

Research-Eval Reka tests agentic search and research capabilities. Grok 4.1 Fast scored 63.9 with an average cost of $0.046 per task (approximately ₹3.86)—significantly better than GPT-5 (45.5, $0.107 / ₹8.95) and Claude Sonnet 4.5 (41.2, $0.065 / ₹5.44).

FRAMES measures multi-step reasoning and document synthesis. Grok 4.1 Fast scored 87.6 with an average cost of $0.048 (approximately ₹4), outperforming GPT-5 (86.0, $0.058 / ₹4.85) and Claude Sonnet 4.5 (85.0, $0.078 / ₹6.50).

X Browse is an internal benchmark that evaluates search and browsing on X. Grok 4.1 Fast scored 56.3 with an average cost of $0.091 (approximately ₹7.60), more than double GPT-5’s score (24.2, $0.198 / ₹16.50) and nearly four times Claude Sonnet 4.5’s score (14.6, $0.126 / ₹10.50).

These benchmarks show that Grok 4.1 Fast delivers frontier-level intelligence while keeping costs manageable, especially for tasks that involve tool use, search, and multi-step reasoning.
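To compare cost efficiency across the three benchmarks that report a per-task cost, the figures above can be reduced to a crude "score per dollar" ratio. That metric is an assumption introduced here, not part of any benchmark, and it ignores everything except the two numbers quoted in this article.

```python
# (score, average cost per task in USD) as quoted in this article.
RESULTS = {
    "Research-Eval Reka": {
        "Grok 4.1 Fast": (63.9, 0.046),
        "GPT-5": (45.5, 0.107),
        "Claude Sonnet 4.5": (41.2, 0.065),
    },
    "FRAMES": {
        "Grok 4.1 Fast": (87.6, 0.048),
        "GPT-5": (86.0, 0.058),
        "Claude Sonnet 4.5": (85.0, 0.078),
    },
    "X Browse": {
        "Grok 4.1 Fast": (56.3, 0.091),
        "GPT-5": (24.2, 0.198),
        "Claude Sonnet 4.5": (14.6, 0.126),
    },
}


def score_per_dollar(score: float, cost_usd: float) -> float:
    """Benchmark points obtained per dollar of average task cost."""
    return score / cost_usd


def best_value(benchmark: str) -> str:
    """Name of the model with the highest score-per-dollar on a benchmark."""
    models = RESULTS[benchmark]
    return max(models, key=lambda name: score_per_dollar(*models[name]))


if __name__ == "__main__":
    for benchmark, models in RESULTS.items():
        for name, (score, cost) in models.items():
            print(f"{benchmark:20s} {name:18s} {score_per_dollar(score, cost):8.0f}")
```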

Pricing and Free Access Opportunities

xAI is making Grok 4.1 Fast accessible through promotional windows and transparent pricing.

For two weeks after launch (until December 3, 2025), both Grok 4.1 Fast models are available for free on OpenRouter. This gives developers a chance to test the model, benchmark it against their current stack, and build prototypes without upfront costs.

The xAI Agent Tools API is also free during this period, allowing you to experiment with web search, code execution, X search, and document retrieval without paying for tool invocations.

Once the promotional period ends, here’s the standard pricing:

📌 Input tokens: $0.20 per million tokens (approximately ₹17 per million tokens)

📌 Cached input tokens: $0.05 per million tokens (approximately ₹4 per million tokens)

📌 Output tokens: $0.50 per million tokens (approximately ₹42 per million tokens)

📌 Tool calls: Starting at $5 per 1,000 successful invocations (approximately ₹420 per 1,000 calls)

Cached input tokens are portions of your prompt that have been sent before and can be reused without reprocessing. This significantly reduces costs for workflows where you repeatedly send the same context—like system prompts or large documents.
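A small estimator makes the effect of caching easier to see. The rates below are the ones listed above; the function name and the billing model (cached tokens charged at the cached rate instead of the input rate, tool calls charged at the "starting at" price) are simplifying assumptions, so treat the output as a lower-bound estimate rather than an invoice.

```python
INPUT_USD_PER_M = 0.20         # uncached input tokens, per million
CACHED_INPUT_USD_PER_M = 0.05  # cached input tokens, per million
OUTPUT_USD_PER_M = 0.50        # output tokens, per million
TOOL_USD_PER_1K = 5.00         # "starting at" price per 1,000 successful calls


def estimate_cost(
    input_tokens: int,
    cached_input_tokens: int,
    output_tokens: int,
    tool_calls: int = 0,
) -> float:
    """Estimate cost in USD; `input_tokens` counts only uncached input."""
    return (
        input_tokens / 1_000_000 * INPUT_USD_PER_M
        + cached_input_tokens / 1_000_000 * CACHED_INPUT_USD_PER_M
        + output_tokens / 1_000_000 * OUTPUT_USD_PER_M
        + tool_calls / 1_000 * TOOL_USD_PER_1K
    )


if __name__ == "__main__":
    # A 500,000-token document sent 10 times, input cost only.
    without_cache = estimate_cost(5_000_000, 0, 0)
    with_cache = estimate_cost(500_000, 4_500_000, 0)  # first send uncached
    print(f"without cache: ${without_cache:.3f}, with cache: ${with_cache:.3f}")
```

Under these assumptions, resending the same 500,000-token document ten times costs about $1.00 in input tokens without caching and about $0.325 with it.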

Compared to other frontier models, Grok 4.1 Fast offers a strong balance between performance and cost, especially for agentic workflows where tool calling is frequent.

How to Start Using Grok 4.1 Fast

Getting started with Grok 4.1 Fast is straightforward. The xAI API is compatible with OpenAI and Anthropic SDKs, so migration is simple if you’re already using those platforms.

First, create an xAI API key through the console at https://console.x.ai. Once you have your key, you can start making requests using the xAI SDK or any OpenAI-compatible library.

Here’s a basic example in Python:

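```python
import os

from xai_sdk import Client
from xai_sdk.chat import user
from xai_sdk.tools import code_execution, collections_search, web_search, x_search

# Read the API key from the environment rather than hard-coding it.
client = Client(api_key=os.getenv("XAI_API_KEY"))

# Create a chat session with the server-side tools enabled.
chat = client.chat.create(
    model="grok-4-1-fast-reasoning",
    tools=[
        web_search(),
        x_search(),
        code_execution(),
        collections_search(collection_ids=["your-collection-id"]),
    ],
)

# Send a question; the prompt text is only a placeholder.
chat.append(user("Summarize today's top AI news."))
response = chat.sample()
print(response.content)
```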

In this example, you’re enabling web search, X search, code execution, and document search. Grok will automatically decide when to use these tools based on the user’s query.
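Because the API is described as OpenAI-compatible, a plain chat request can also be sketched without any SDK. The example below only uses the Python standard library and assumes the OpenAI-style endpoint `https://api.x.ai/v1/chat/completions` and response shape; both should be confirmed against the xAI documentation. It does not enable any server-side tools, and the helper names are invented for this sketch.

```python
import json
import urllib.request

# Assumed OpenAI-style endpoint; verify against the xAI API documentation.
XAI_CHAT_URL = "https://api.x.ai/v1/chat/completions"


def build_chat_request(
    prompt: str,
    api_key: str,
    model: str = "grok-4-1-fast-non-reasoning",
) -> urllib.request.Request:
    """Build (but do not send) a chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        XAI_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send_chat_request(request: urllib.request.Request) -> str:
    """Send the request and return the first reply, assuming an OpenAI-style body."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Calling `send_chat_request(build_chat_request("Hello", api_key))` with a valid key would perform the network call; keeping construction and sending separate makes the request easy to inspect and test offline.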

If you prefer to try Grok 4.1 Fast without writing code, you can access it for free on OpenRouter during the promotional period. This is a great way to test capabilities, compare outputs with other models, and see if it fits your use case before committing to a paid plan.

For developers building production applications, the xAI API documentation provides detailed guides on authentication, tool configuration, streaming responses, error handling, and best practices.

When Grok 4.1 Fast is the Right Choice

Grok 4.1 Fast isn’t the perfect model for every situation, but it excels in specific scenarios:

✅ Choose Grok 4.1 Fast if you need long context understanding. The 2 million token window handles entire codebases, multi-year reports, or large document collections without chunking.

✅ It’s ideal for agentic workflows where the model needs to search, execute code, retrieve documents, and synthesize results autonomously. The Agent Tools API removes the need for custom orchestration layers.

✅ For cost-sensitive enterprise workloads, Grok 4.1 Fast delivers frontier performance at a lower total cost than competitors, especially when tool calling is frequent.

✅ If you’re building applications that rely on real-time X data or live web search, Grok’s native integrations give you faster, more accurate results than systems that rely on external APIs.

✅ It’s a strong fit for customer support automation where the model needs to pull information from multiple sources, execute actions like booking updates, and maintain context across long conversations.

✅ For research and document synthesis, the combination of large context, semantic search, and citation support makes Grok 4.1 Fast one of the best options available.

⛔️ On the other hand, if you need image generation, video creation, or multimodal output, Grok 4.1 Fast isn’t designed for that.

⛔️ If your primary use case is simple text generation or lightweight chat without tool use, smaller, faster models may be more cost-effective.

What Comes Next for xAI and Grok

xAI is positioning Grok 4.1 Fast as the foundation for a new generation of agentic AI applications. The combination of tool-calling excellence, massive context windows, and server-side execution opens the door to more autonomous, intelligent systems.

The company is actively expanding its data center infrastructure to support large-scale AI workloads, with facilities in Memphis, Tennessee, and other locations across the United States. This infrastructure build-out is designed to handle next-generation AI models and support enterprise customers with high-volume, mission-critical applications.

xAI is also exploring deeper integrations with X, allowing Grok to access richer data streams, engage with live conversations, and provide more contextually aware responses. This positions Grok uniquely in the market, with access to real-time social data that competitors can’t replicate.

For developers, the free promotional period ending December 3, 2025, is an opportunity to experiment, benchmark, and build prototypes before committing to production deployments. The xAI API’s compatibility with OpenAI and Anthropic SDKs makes migration easy, and the transparent pricing structure helps teams forecast costs accurately.

As frontier models become more capable, the bottleneck shifts from raw intelligence to practical usability—how fast can the model respond, how reliably does it execute tools, how well does it maintain context across long conversations. Grok 4.1 Fast is designed to excel in these areas, making it a compelling choice for teams building the next generation of AI-powered applications.