GLM-4.6: Performance & Cost Efficiency

A detailed comparison of GLM-4.6 against Claude Sonnet models in performance, cost, and capabilities

Near Performance Parity

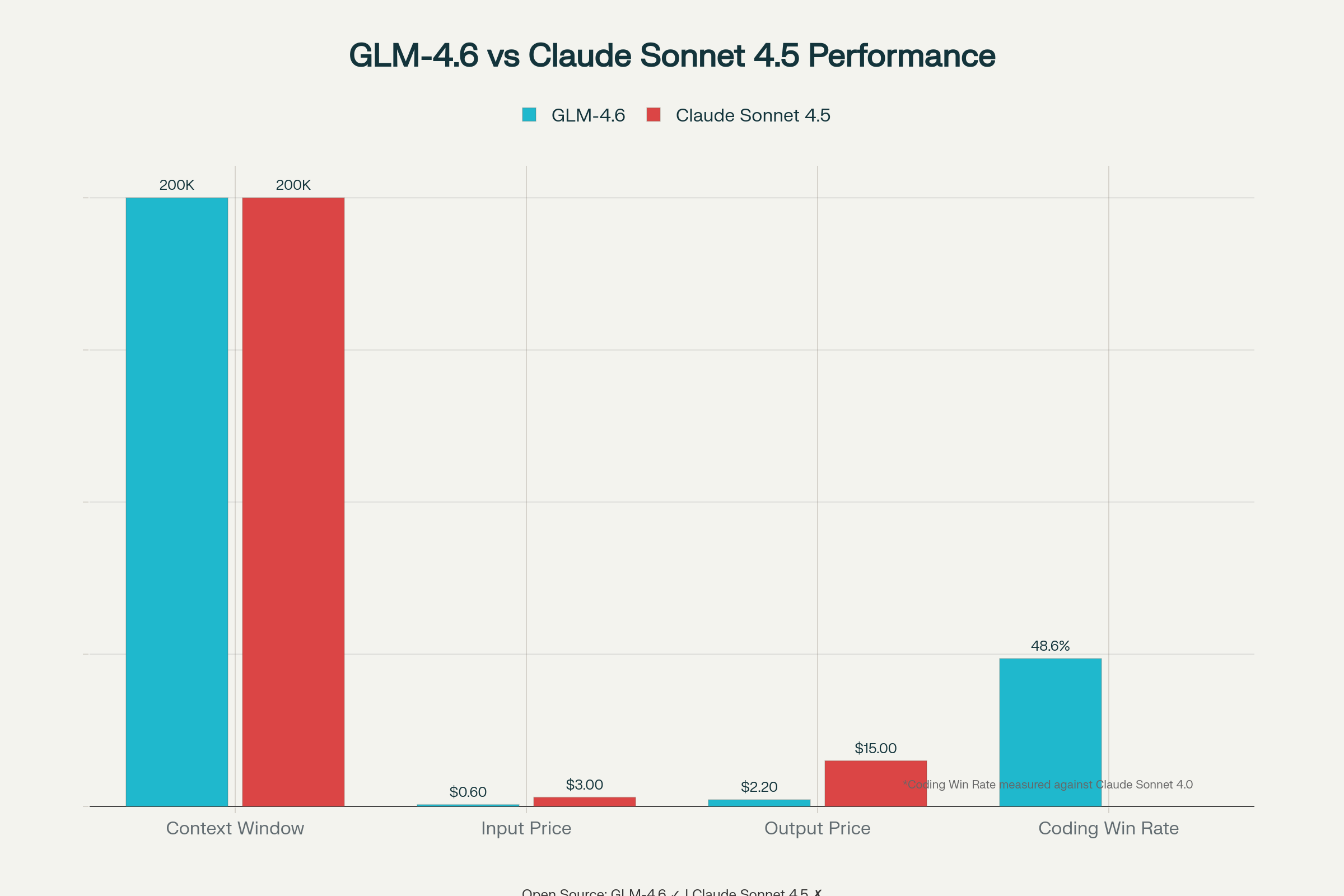

GLM-4.6 achieves an impressive 48.6% win rate against Claude Sonnet 4 in real-world coding tasks, demonstrating competitive performance against one of the leading AI models.

5x More Cost-Effective

Priced at just $0.60/$2.20 per million input/output tokens compared to Claude’s $3/$15, GLM-4.6 delivers similar performance at one-fifth the input cost and roughly one-seventh the output cost, making advanced AI capabilities more accessible.

30% More Token Efficient

Uses fewer tokens per task (651,525 vs 800,000–950,000 for other models, a saving of roughly 19–31%) while maintaining performance, resulting in faster processing and lower operational costs.
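The "30%" headline can be checked against the token counts quoted above. The following is a minimal Python sketch; the function name `token_savings` is illustrative, and the two baselines are simply the ends of the range quoted for other models.

```python
def token_savings(model_tokens: int, baseline_tokens: int) -> float:
    """Fraction of tokens saved relative to a baseline model."""
    return 1 - model_tokens / baseline_tokens


GLM_46_TOKENS = 651_525               # tokens per task quoted for GLM-4.6
BASELINE_RANGE = (800_000, 950_000)   # range quoted for other models

low, high = (token_savings(GLM_46_TOKENS, b) for b in BASELINE_RANGE)
print(f"Savings: {low:.1%} to {high:.1%}")  # Savings: 18.6% to 31.4%
```

The saving is about 19% against the most frugal competitor and about 31% against the most token-hungry one, so "30%" describes the upper end of the range.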

Expanded Context Window

Features a 200K token input context with 128K maximum output, matching Claude’s 200K context window and enabling processing of longer documents and more complex tasks.

Open Source Advantage

Available with open weights for local deployment, unlike proprietary Claude models, giving developers more flexibility, customization options, and independence from API-based services.

Coding Performance Gap

Despite significant improvements over previous versions, GLM-4.6 still lags behind Claude Sonnet 4.5 in coding ability, indicating areas for future development and enhancement.

Is GLM-4.6 the Open Source Alternative That Can Replace Claude Sonnet 4.5?

The AI community is buzzing with excitement over GLM-4.6, Zhipu AI’s latest open-source model that promises to challenge the dominance of Claude Sonnet 4.5. But can this free alternative truly match the performance of Anthropic’s premium offering? Let’s examine the evidence and see if GLM-4.6 lives up to its bold claims.

What Makes GLM-4.6 Special? Understanding the Open Source Contender

GLM-4.6 represents a significant leap forward in open-source AI technology. Built with a 355B-parameter Mixture of Experts (MoE) architecture, it features an impressive 200K token context window and is completely free to use with open-source weights available on HuggingFace. The model was specifically designed to excel in coding, reasoning, and agentic tasks—the same areas where Claude Sonnet 4.5 has established its reputation.

What sets GLM-4.6 apart is its accessibility. Unlike Claude Sonnet 4.5, which is available only through paid API calls, GLM-4.6 can be deployed locally or accessed through Z.ai’s affordable API at just $0.60 (₹50) per million input tokens and $2.20 (₹185) per million output tokens. Compared to Claude’s $3 (₹250) input and $15 (₹1,250) output pricing, this is an 80% reduction on input and about 85% on output.

Performance Reality Check: How GLM-4.6 Actually Measures Against Claude Sonnet 4.5

The benchmark results tell a nuanced story. GLM-4.6 achieves near parity with Claude Sonnet 4, scoring a 48.6% win rate in real-world coding tasks measured by CC-Bench. However, Zhipu AI openly acknowledges that GLM-4.6 still lags behind Claude Sonnet 4.5 in coding ability.

Here’s what the performance data reveals:

Coding Performance:

📌 GLM-4.6 matches Claude Sonnet 4 in most coding benchmarks

✅ Shows significant improvements over previous GLM versions

⛔️ Still trails Claude Sonnet 4.5 in specialized coding tasks

Efficiency Gains:

➡️ Uses 15% fewer tokens than GLM-4.5 for the same tasks

➡️ 30% more efficient in token consumption compared to competitors

➡️ Faster inference and reduced computational costs

Real-World Applications:

The model integrates seamlessly with popular coding tools like Claude Code, Cline, Roo Code, and Kilo Code. Early adopters report excellent performance in front-end development, tool creation, and data analysis tasks.

Where GLM-4.6 Shines: Advantages Over Claude Sonnet 4.5

Cost Effectiveness Beyond Compare

The most compelling advantage is financial, and the savings scale with usage. At output rates, 10 million tokens per month cost approximately $22 (₹1,850) with GLM-4.6 versus $150 (₹12,500) with Claude Sonnet 4.5; at input rates, the same volume costs $6 (₹500) versus $30 (₹2,500). Real bills fall between these bounds depending on the input/output mix. Savings in the thousands of dollars per month require volumes on the order of 100 million tokens or more.
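Because input and output tokens are priced differently, a monthly estimate needs both counts. Here is a minimal Python sketch using the per-million-token prices quoted in this article; the `Pricing` class, the `monthly_cost` helper, and the 80/20 input/output split are illustrative assumptions, not measured usage.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """USD per million tokens, as quoted in this article."""
    input_per_m: float
    output_per_m: float


GLM_46 = Pricing(input_per_m=0.60, output_per_m=2.20)
CLAUDE_SONNET_45 = Pricing(input_per_m=3.00, output_per_m=15.00)


def monthly_cost(p: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Total monthly cost in USD for the given token volumes."""
    total = input_tokens * p.input_per_m + output_tokens * p.output_per_m
    return total / 1_000_000


# Hypothetical workload: 10M tokens per month, 80% input and 20% output.
inp, out = 8_000_000, 2_000_000
glm = monthly_cost(GLM_46, inp, out)
claude = monthly_cost(CLAUDE_SONNET_45, inp, out)
print(f"GLM-4.6: ${glm:.2f}  Claude Sonnet 4.5: ${claude:.2f}  "
      f"saving: {1 - glm / claude:.0%}")
```

For this assumed mix the totals are $9.20 and $54.00, a saving of about 83%. Changing `inp` and `out` to match your own traffic gives a more realistic comparison.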

True Open Source Freedom

GLM-4.6 offers complete transparency with open weights, allowing developers to:

- Inspect and modify the model architecture

- Deploy on private infrastructure for sensitive projects

- Avoid vendor lock-in concerns

- Customize the model for specific use cases

Extended Context Window Parity

Both models feature 200K token context windows, but GLM-4.6’s implementation is optimized for efficiency, handling large documents and complex conversations without the premium pricing of Claude.

Limitations and Honest Assessment: Where Claude Sonnet 4.5 Still Leads

Coding Sophistication Gap

While GLM-4.6 performs admirably in general coding tasks, Claude Sonnet 4.5 maintains an edge in complex software engineering scenarios. SWE-bench Verified results show Sonnet 4.5 achieving 77.2% versus GLM-4.6’s 68.0% in fixing real open-source code bugs.
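To put the SWE-bench Verified gap in proportion, the quoted scores can be compared both as an absolute difference and as a ratio. This is a small Python sketch using only the two figures above; the variable names are illustrative.

```python
SONNET_45_SCORE = 77.2  # SWE-bench Verified, percent, as quoted above
GLM_46_SCORE = 68.0     # SWE-bench Verified, percent, as quoted above

gap_points = SONNET_45_SCORE - GLM_46_SCORE
relative = GLM_46_SCORE / SONNET_45_SCORE
print(f"Gap: {gap_points:.1f} points; "
      f"GLM-4.6 reaches {relative:.1%} of Sonnet 4.5's score")
```

On this benchmark GLM-4.6 trails by 9.2 percentage points, which is about 88% of Claude Sonnet 4.5's score.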

Enterprise-Grade Reliability

Claude Sonnet 4.5 offers more mature enterprise features:

- Established support infrastructure

- Proven reliability in production environments

- Advanced safety and alignment features

- Better performance in multimodal tasks

Specialized Domain Performance

For highly specialized applications in finance, legal, or medical domains, Claude Sonnet 4.5’s extensive training and fine-tuning still provide advantages that GLM-4.6 hasn’t fully matched.

Local Deployment: Making GLM-4.6 Work for You

One of GLM-4.6’s strongest advantages is local deployment capability. The model supports:

Hardware Requirements:

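- 2–4× 80GB A100/H800 GPUs as the quoted baseline; because 355B parameters at 16-bit precision occupy roughly 710 GB for weights alone, this figure is realistic only with quantized weights

- Quantized versions (Int4/FP8) to reduce the GPU count; even at Int4 the weights come to roughly 178 GB, more than a single 80GB GPU can hold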

- Support for domestic Chinese chips (Cambricon, Moore Threads)
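A back-of-the-envelope check of the hardware figures above: weight memory is roughly the parameter count times the bytes per parameter. The Python sketch below assumes the 355B total parameter count stated earlier and counts weights only, ignoring KV cache, activations, and framework overhead, so it gives a lower bound. The names are illustrative.

```python
import math

TOTAL_PARAMS = 355e9   # total parameters, as stated in this article
GPU_MEMORY_GB = 80     # memory of one 80GB A100/H800
BYTES_PER_PARAM = {"fp16/bf16": 2.0, "fp8": 1.0, "int4": 0.5}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Memory needed for the weights alone, in GB (10^9 bytes)."""
    return params * bytes_per_param / 1e9


for name, width in BYTES_PER_PARAM.items():
    gb = weight_memory_gb(TOTAL_PARAMS, width)
    gpus = math.ceil(gb / GPU_MEMORY_GB)
    print(f"{name:>9}: {gb:6.1f} GB of weights, "
          f"at least {gpus} x {GPU_MEMORY_GB} GB GPUs")
```

This yields 710 GB (at least 9 GPUs) at 16-bit, 355 GB (at least 5) at FP8, and 177.5 GB (at least 3) at Int4. In a Mixture of Experts model every expert must be resident in memory, so the total parameter count determines the footprint, not the number of parameters active per token.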

Deployment Options:

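    # Replace /path/to/glm-4.6 with the local checkpoint directory.
    # --quantization must name a method that your vLLM version supports and
    # that matches the checkpoint (for example fp8, awq, or gptq).
    python -m vllm.entrypoints.api_server \
      --model /path/to/glm-4.6 \
      --dtype float16 \
      --quantization fp8 \
      --tensor-parallel-size 2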

The model works with popular inference frameworks including vLLM and SGLang, making integration straightforward for technical teams.

Integration with Development Tools: Real-World Usage

GLM-4.6 integrates smoothly with existing development workflows:

Coding Agent Integration:

- Claude Code: Direct model replacement with updated configuration

- Cline and Roo Code: Native support with identical API interface

- VS Code extensions: Compatible through OpenRouter integration

API Access:

Both Z.ai’s direct API and OpenRouter provide access, with comprehensive documentation and examples available.
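As an illustration of API access, the sketch below builds (but does not send) a chat completion request using only the Python standard library. It assumes OpenRouter's OpenAI-compatible chat completions endpoint and the model slug `z-ai/glm-4.6`; both are assumptions to confirm against the provider's documentation, and `build_chat_request` is a hypothetical helper name.

```python
import json
import urllib.request

# Assumptions: endpoint URL and model slug; verify both in the provider docs.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"
MODEL_SLUG = "z-ai/glm-4.6"


def build_chat_request(prompt: str, api_key: str,
                       max_tokens: int = 512) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": MODEL_SLUG,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request("Write a function that reverses a string.",
                             api_key="YOUR_API_KEY")
print(request.get_method(), request.full_url)
print(json.loads(request.data)["model"])
```

To actually send the request, pass it to `urllib.request.urlopen` with a real key and parse the JSON response. In the OpenAI-style format the reply text is expected under `choices[0]["message"]["content"]`.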

The Verdict: Strategic Decision Framework

GLM-4.6 serves as an excellent open-source alternative to Claude Sonnet 4.5, but the choice depends on your specific needs:

Choose GLM-4.6 when:

✅ Cost optimization is critical

✅ Open-source transparency matters

✅ Local deployment is required

✅ General coding and reasoning tasks dominate your workload

✅ You need to avoid vendor lock-in

Stick with Claude Sonnet 4.5 when:

⛔️ You need the absolute best coding performance

⛔️ Enterprise support and reliability are non-negotiable

⛔️ Specialized domain expertise is crucial

⛔️ Budget isn’t a primary constraint

Future Outlook: The Open Source Revolution

GLM-4.6 represents more than just another model release: it signals the maturation of open-source AI to near-commercial quality levels. While it may not completely replace Claude Sonnet 4.5 in every scenario, it offers a compelling alternative that delivers roughly 80–90% of the performance (68.0% versus 77.2% on SWE-bench Verified) at 15–20% of the API price.

For many developers and organizations, GLM-4.6 provides the sweet spot of performance, cost-effectiveness, and freedom that makes it the practical choice. As the open-source community continues to contribute improvements and optimizations, models like GLM-4.6 will likely close the remaining performance gaps while maintaining their fundamental advantages.

The question isn’t whether GLM-4.6 is perfect—it’s whether it’s good enough for your specific use case while offering the benefits of open-source development, transparent costs, and deployment flexibility. For most applications, the answer is increasingly “yes.”